talk series on a dense topics in programming languages. As I start every single one of

these talk series--these talks in the talk series with, I would like to just make a quick

plea for some--but for, for people who are interested in various topics in programming

languages to come and give talks. I see a lot of people who have given talks but I see

a lot of people whom I know, know a lot about a lot of topics and have not yet given talks.

So please come up to me and tell me, "I would love to give a talk." And we can set something

up.

Today we have a talk on a topic which should be near and dear to the heart of every

Googler who writes C++ programming. Because this is a--this is coming down the pike really

quickly and everybody needs to know about it. It's from Lawrence whos given several

talks on C++ standards. He's very involved in the C++ standards process.

And today he's

going to talk to us about multi-threading in C++ and the--and the changes that the standard

has in store for us with respect to it. Lawrence. >> CROWL: Can you hear me? Good. I'm going

to start of with the introduction.

But my real reason for starting with this slide is

to let you know that when one of these types of slides appears, that means I'm about to

make a significant topic shift. So if you've been sort of sitting on a question, one of

those topic shifts would be the time to ask it because I'm going to move on to something

completely different. The first thing I want to talk about is where this all fits in. There's

a quite of number of changes to C++ coming with the next standard.

And one of the major

goals is to extend C++ into concurrency. Right now, it's a serial language and that's no

longer acceptable. But an important feature of C++ and one of the major reason its success

is, it lives within the computational environment. You can include the system headers, all this

kind of things.

So C++ is connected to the rest of world, it's not an isolated language.

And we want to preserve that feature as we move forward into concurrency. And finally,

C++ gets a fair bit of its power from being able to let good library writers write good

libraries. And we are going to be unable to provide the right parallel solution. So what

we want to do is make sure that what we provide enables library writers to do the right thing

for their application or for their industry and move forward from there.

So what I am

going to show you today is for the most part as start not an end. And for this talk, what

I'm trying to do is just basically outline the primary features. I can't go into all

the details but I can give you an overview of where things are heading. And not all of

this is stable yet.

There's active debate within the committee about what to do. Some

of the syntax hasn't been chosen, some of the semantics hasn't been chosen. And because

of all that debate, I would like to get from you, feedback on where you think we're doing

things right, where you think we're doing things wrong and so forth. Finally, as this

is dealing with the C++ standard, I have a set of disclaimers here which means basically

that you should take what I say today as a hint not as an answer.

So everybody has heard

the story about multi-core processing and how it's coming and we're not going to get

single cores that are going to go any faster so all the chips are being multi-core. And

we're going to have to start dealing with multi-threading. And that's part of the story

of why we need to add threads. The other part of the story is that, it's now a connected

world.

We have the--each computer talks to the internet, and some of the ways you write

programming is better in a concurrent world even if you're still on one core. And so we're

looking at those kinds of issues. The other issue, which applies less to Google's specific

applications but does apply to other application domains, is some programs require very large

machine resources. You know, hundreds or thousands of processors.

And those you can't do without

some form of concurrency. So people are getting by today and what do they get out of the C++

standard on all this stuff. Why isnt POSIX. Good enough? Well for one thing is, it's not

just POSIX, it's also Windows, it's also embedded systems, it's also main frames.

There is a

wide variety of machines out there. And while the model is dominated by Windows and POSIX,

it's not solely Windows and POSIX. So we'd like to create a portable expression of programs.

The other thing that, having this in the C++ standard does, is it creates a bigger community

to talk about parallel computing, concurrent programming and so forth. So some of the solutions

that people create, if they create it in a standard environment, it will spread to a

wider community, and a wider community can work on it.

And that's a big leverage on the--on

the work that all of us are going to be doing. The next big approach we're taking is to standardize

on the current computing environment. So we're not going to try and invent a totally new

model of parallel processing in a C++ community. The world is littered with parallel computing

languages that died.

And so we are going to standardize on a model that is well supported

by the current operating systems. And we have strong belief that those will be around for

quite a while. So we have one C++ thread, will be one OS thread. It's heavyweight, independently

scheduled, pre-emptive, etc.

So that cost model is exactly the same as what you are

used to with POSIX or Windows threads. The second major bullet point is its shared memory.

The variables of the threads are all shared among all the other--all the other threads.

There's no isolation of memory. And this too is in common with POSIX and Windows threads.

The big point we also need to make, is that we are not trying to compete with other standards.

There are standards from message passing. We're not trying to displace that.

There's

OpenMP which is a standard for, sort of, hierarchical loop-based programming. We're not trying to

replace that. We're not trying to replace automatic parallelization in compilers. So

we're really trying to sort of provide a standard access to the environment.

And we have a--we

have a, sort of, a split view of what we're doing on this. And this view is a little fuzzy

but two--two sides, change is necessary to the core language to make this all work. And

change is necessary to the standard library--they give you a library interface on some things.

Now this separation isnt entirely clean because sometimes they overlap. Some things

that look like a library interface are in fact the core language change.

So there--it's

a bit fuzzy there. But overall you can think of this split. And the core language, were

really concentrated on what is memory, what are variables. And when two threads touch

a variable at the same time, what happens? What does that mean? That's the kind of core

level changes.

And this core language change area is where I'm going to be spending most

of this talk for two reasons. One is, I know more about it and the second is that this

area of the language is more baked right now. The second area is in a standard library and

this focuses on how threads are created, and synchronized, and how we deal with, what happens

when a thread stops, how do we make a thread stop and so--and layers beyond that. Yes?

>> So, when you've--say--let's say I want to know if directly--if reading the strings

simultaneously, [INDISTINCT] let's just say, the difficult [INDISTINCT] it core language

or library.

>> CROWL: Well, the string is an abstraction

put... >> [INDISTINCT] library.

>> CROWL: ...Put out by the library. So there's actually a slightly subtle answer here. But

it's important--the answer is that, if you are talking to the same variable, then you

must do the synchronization.

The standard library by design is not going to try and

synchronize a variable. On the other hand, if as is common with many implementations

of string, they have reference counted objects. Then the standard library is insuring that

any object shared between two variables is properly synchronized. So you are responsible

for the shallow synchronization on the variable, but any deep synchronization is the responsibility

of the library.

>> Can you repeat the question given earlier?

>> CROWL: I'm sorry. The question was whether or not things like string are synchronized

in the library or not. And so the answers is, halfway in between. Yes?

>> Will there be any thread local storage? >> CROWL: The question was will there be any

thread local storage, and I ask you to wait a bit.

>> Okay.

>> CROWL: So my first topic is, "What is memory?"

The traditional notion of shared memory that everybody thinks about is that, you write

a value to memory and it's instantly visible to all the other threads. This is sort of

what we thought about and in the past, what people tend to think about when they think

about shared memory. But it doesn't work. Among other things it implies faster than

light communication and we've pretty much ruled that out over the last century or so.

It doesn't match the current hardware because they have speed of light limitations as well.

And in addition, current modern compilers even in a serial case will apply optimizations

that make that not true.

So for all of those reasons you're not going to get an instant

shared memory. So there will be some lag between, when you write a variable in one thread and

when somebody else gets to see it. And we're sort of nominally terming this message shared

memory. So you have two threads and they want to communicate.

One thread doing it's writes,

those writes are sort of held up in limbo, until you write to an atomic variable or write--or

acquire a lock. And then those get communicated until another thread accessing that same place,

picks those writes up. So two threads are mediated through special variables.

>> Is it, were these release consistency or acquire consistency? Yes.

>> CROWL: It's detailed. I'll get to that a little bit later.

So the mechanism here

is acquire and release. So a variable when it writes to--a thread, when it writes to

a variable says, "I release all of my writes to this variable." And then somebody coming

along later says "Okay, I want to do an acquire from that variable." And all of the writes

that the one thread did are now visible in the second thread, okay? And so we have a

store release and a load acquire which is sort of the base mechanism of how this stuff

happens. So typically you release things when you do a store and you acquire things when

you do a load. There's also, yes? >> [INDISTINCT] does it mean that another

thread has to acquire [INDISTINCT].

>> CROWL: I'll get to that in a minute. Modern

machines also have the notion of a memory fence. And the proposal right now for C++

does not have memory fences in them. And the, the reason for this is partly the definitional

process, partly that there are some proposals that are in the early stages.

We don't know

quite what's going to happen. But the major thing here is--to take away, is that the current

machines have fences but the current proposal does not. And we don't know how that's going

to settle out. Partly we keep talking about them because in terms of implementation when

we say, this C++ language construct implies these certain barriers on modern machines--these

certain fences on modern machines, we're trying to get an estimate of the cost.

The main problem

is there might be--we might inhibit future architectures if they--if we put fences into

the language. So because we are communicating through shared memory, the sequencing becomes

an issue so within each thread we have a sequence of operations and then we write to shared

memory. And now we want to ask questions about how do threads sequence? How are reads and

writes between threads ordered? But in order to solve that problem, we have to first back

up to what happens in a single thread case. And the old model for sequencing was the sequence

points in the old C and C++ standards.

And those are not well founded. Nobody really

quite knows what they mean, various other problems there. So the committee has done

a significant amount of work. And sequence points are now gone and there a couple of

ordering relations.

The first is sequenced before, where there's a strong sequencing

between operations. And then the next one is indeterminately sequenced where there's

a sort of a weak. It has to be either entirely before or entirely after, but I can't tell

you which. And the key idea here, the key point of the standard is, that if you do a

couple or writes or a read and a write to a variable and those operations are not sequenced,

you now have undefined behavior, okay.

So we dont try and make it defined and it's

up to you to do the right thing--you and your compiler vendor, if they give you warnings.

So--but that's undefined behavior if you dont properly pay attention to your sequencing.

So that's the sequential case. Now, we get in to the parallel case. And the main relationship

we have here between operations is sequenced before. And that comes from the serial case

by adding to the serial case, the acquire and release operations on variables which

then get picked up in another thread.

So if you have intra-thread ordering, you zip over

to an atomic variable, pick that up in another thread and now you have--those operations

are now sequenced before each other across that connection through memory. Okay. And

put all of that together and you have a happens-before relationship between two memory operations

and different threads. And here's where the hard part comes in.

So data races. If you

have a write to--a regular variable, not atomic but a regular variable and some other thread,

either reads or writes to that same variable without this happens-before relationship between

those two, then you have a race condition and your program has undefined behavior. Now,

I want to be clear here, undefined behavior means yes, you just did order a thousand hogs

to be delivered to your front door on Tuesday, okay. Really the standard can't say anything

about what's going on because you're suddenly getting random word tearing, wild pointers,

all that kind of stuff.

So the standard really can't say what's going on. So you get undefined

behavior. >> But you also with--prevent useful non-deterministic

programs from the information, right? >> CROWL: So the question is won't you also

be preventing useful non-deterministic programs from happening. The answer is, no, that's

not true.

But you can't use regular variables to make that happen. You have to use atomic

variables to make non-deterministic programs happen. In that last slide, I sort of slid

by this, a memory location. And just waving your hands like that is not quite good enough

for the standards.

So we have a actual concrete definition. And that is a non-bitfield primitive

data object or a sequence of adjacent bitfields with some weasel wording. So the idea behind

all of this is to avoid having to do atomic read-modify-write operations to access bitfields.

So we're going to continue with the current compiler notion of read the bitfield and all

of the bits around it, change the part that has your bitfield, and write it back out.

And if there's any collision between two threads, that's a data race and you have a problem.

So, if you--if your bitfields really do need to be atomic then you have to go to extra

work to separate in memory. And for people that are working in binary worlds, this will

also change--the layout of your structures in memory could increase the amount of memory

you use, could change compatibility with existing libraries.

So, be careful in that location.

Now, this model of memory and data races and so forth does have an effect on optimization,

it's not entirely free. There are some speculative reads and writes that current optimizing compilers

have been known to do that will no longer be legal. And the reason is that when you're

compiling some function, you have no idea if it's in a multi-thread application or not.

You might not know that it's in a context where the code can't be moved around or the

writes can't be dropped and so forth. We believe that those speculative reads and writes will

not be a major--major impact on program performance.

We think its in the low single digit range.

But of course we can't guarantee that for all programs. There is, however, one case

where we had to have a special rule and that is the compiler can assume that a loop will

terminate. There are a substantial number of optimizations that involve pulling code

out of loops, in front of the loop, or behind the loop. And if the loop never terminates,

you can't really do that kind of stuff.

So, we made the rule that you can assume that

the loops--that the compiler can assume the loops terminate. For the most case this is

nearly always true and in the cases where the loops dont terminate, they typically

have shared memory operation or locks or IO. Or some other thing that would solve the problem

as well. This really only affects, tight loops that the compiler can't determine to terminate.

Yes.

>> What is a loop?

>> CROWL: What is a loop? Thats basically control flow art.

>> Is there something that these things tend to make interesting implications?

>> CROWL: Yes. So, the--in this case, the compilers does not have to determine that

it's going to terminate. And this allows a lot of the current optimizations to continue

to go through. >> So the definition of loops doesnt include

the control flow via exceptions? >> CROWL: So the--the question was, the definition

of loop doesnt include control flow via exceptions.

And if you create a loop via control

flow, that is not currently covered by the definition.

>> Its probably also worth reiterating that if you--if you perform some these optimizations,

your not going to be changing single-thread semantics [INDISTINCT]

>> CROWL: So the question was that if you perform some of these optimizations, you're

not going to be... >> Across loops.

>> CROWL: Across loops, you're not going to be changing single-thread semantics, and thats

correct. Okay. So we talked about memory and some of the interactions there, and we've

said that we're going to communicate reads and writes through atomics and locks.

And

now we're going to talk about the atomics. So we have to say, "What is atomic?" And what

we have is a set of atomic operations on a single variable. So, the operation on that

one variable is atomic and those operations will appear to execute sequentially on the--this--the

view on that variable is sequential to all threads. So they will see the same order of

events in the system for that one atomic variable.

So if thread A writes two values to an atomic

variable, thread B will see those two writes in exactly same order. Now, it may not see

both of them because there's a certain resolution problem, but it will--it will appear as though

there's an inherent sequential order. We do not use the volatile key word to indicate

this. Java does use the volatile key word.

C and C++ have a longer history and than Java

and we've been using the volatile key word for many years and we chose not to change

it's meaning. It still has the old device register meaning that it's always had. And

it does not indicate atomicity. But you can have a volatile atomic variable, which says

that there might be some external agent that changes this variable in addition to some

other thread.

So atomic is a notion between threads and volatile is a notion to the environment.

We also have a set of requirements on atomic variables, the first big one is static initialization.

If you are using these things to synchronize threads that may pop into existence, you dont

really want to worry about the thread starting before youve had a chance to initialize

the atomic variable. So one of our key criteria here was we wanted to make sure that the atomic

variables are statically initialized. We also want a reasonable implementation on current

and future hardware. So we didnt want to create some--type of atomic variable that

was inherently expensive on current hardware.

They're all going to be a bit more expensive

than regular variables but we dont want to have huge costs. We'd also like to enable

relative novices to write working code. Now, I want to warn you here that "relative novices"

is a very small group. The experts are the people that publish in Principles of Distributed

Computing and find errors in other papers on that same journal.

So, thats where the

experts are, and then around them are people that sort of can do a lot of it but aren't

really experts and incidentally I fall in that category. So, most C++ programmers are

not in the category where we expect them to be using atomics. Our final criteria--or one

of our criteria is to enable those people that write the Principles of Distributed Computing

papers to write very efficient lock-free code. We want to give them all of the tools to make

the hardware sing.

Because while that kind of code doesnt have to be written very often,

when it does have to be written it's performance critical. And we also wanted to leverage those

very bright people that know how to do this kind of stuff. So we want them to be able

to write lock-free libraries and give the rest of the world higher level data structures

that do the right thing where the users of those data structures dont have to worry

about the gory details. Yes? >> Question, is atomic a property of a variable

or is it a property of the--of the way you access the variable?

>> CROWL: The question was, "Is the atomic a property of the variable or the way you

access the variable?" Its a property of the variable.

Now, I'll get to that in a second.

So, the operations on an atomic variable have a couple of attributes, one is acquire which

we talked about, I want to get other memory writes, release I want to give my memory writes.

You might also do both an acquire and a release at the same time and then finally, you want

to do relaxed. Which is there's no acquire and no release implied. And thats where

you get the non-deterministic behavior. So, you're not necessarily synchronizing any of

the rest of the memory, but you still do get that sequential view of that one variable.

And the other memory ordering we get in here is fully ordered.

Where we get extra ordering

semantics beyond what arises from simply acquire and release. The problem in all of these atomic

variables is that too little ordering on your variables will break programs. But too much

ordering slows the machine down substantially and you need to find a happy balance. As it

turns out most programmers when they write this code, should be conservative.

And put

in too much ordering because then your more likely going to get correct code. And then

when you find you have a measured performance problem, you might want to go back in and

relax things a bit and use weaker ordering constraints. But, once you start down that

road, you need to take the attitude that you're writing public code and get lots of code reviews

and so forth to make sure that it's working. And part of the reason for this is that people

tend to think of a world in a consistent fashion, and once you start getting into weaker memory

models, you don't get that consistency.

And in particular if you look at this code example,

two threads independently write to independent variables, and then two other threads read

those variables. And do they get a consistent view? That is, is there a system wide total

store order on X and Y. Some hardware does provide this, other hardware does not, and

systems that don't provide it can be faster. But people have a harder time programming

without it.

So what we have done? What we've chosen to do is--for the fully ordered atomic

operations, we have made them sequentially consistent because this is where people seem

to be most comfortable in terms of getting correct code. It's a little bit less efficient.

Slightly weaker models don't seem to have a good formalism. We sort of wave our hands

and can say it sort of like this, but we don't really have a good formalism. And we're trying

to make sure that the language has a good formalism.

Because a good formalism helps

programmers and compiler writers resolve any of their differences. And then we have weaker

models that you can get to through explicit programming. So I'm going to switch gears

a little bit, now that we understand the background for the atomics, I'm going to sort of build

up what they look like in code. Yes? >> [INDISTINCT] in the previous example, all

the operations of X and Y were ordered by [INDISTINCT]

>> CROWL: Yes.

So if you use the operations that are syntactically most convenient, they

will be ordered. Now if you start writing more robust code you can weaken that.

>> Does order apply only to the--to a single variable or does it apply to the entire...

>> CROWL: It applies to all variables. So if you are using only the fully ordered operations,

you will have a sequentially consistent view of all the atomic variables. But once you

start getting away from the fully ordered operations, things will get weaker and they

won't look sequentially consistent.

Okay. One of the problems that the C++ language

has to deal with is a wide variety of hardware. And in fact we have some older hardware and

some embedded hardware that may not have all the full hardware support that some of us

have become useful--used to. So the standard is built around one primitive atomic data

type that you really have to have hardware support for, and this is the atomic flag.

And it has test and set and like semantics.

Once you've got that, you can build up the

rest of the environment. It will not necessarily be the fastest thing that you ever saw but

it should be sufficient. And at this level of programming it's really down in the basics

and you want to stay away from this as much as possible. Passed that, we have a few basic

atomic types, a Boolean, a set of integers and a void pointer.

And you can build these

from that flag that I showed you earlier and the proposal before the standard shows how

to do that. And--but we expect people to go a little bit further than that. But those

basic operations have a set of C-level operations which look like function calls as I showed

you in some of the earlier examples, and as you here--see here on the screen. You can

use these same operations from both C and C++.

In C they'll look like type-generic macros,

in C++ they'll look like overloaded functions. But core thing is, you can write the same

syntax and the same things will happen. And those operations include the ordering constraints

that we talked about earlier. But we also have a C++-level to the operations.

And here

those atomic types look like classes. And we have a small number of member functions

and a small number of operators. And those are the fully ordered operations we talked

about earlier. So, when you write--do an assignment, you will get an atomic, when you do a compare

and swap that will be strong, when you do a plus equals operation that increment is

atomic, and it's strong--that is fully ordered.

But there's a problem here and that is, if

you look at a C++ class it comes with, sort of, this default assignment operator. And

that default assignment operator is wrong for atomic operations. Because the compiler

is going to generate a plain load and a plain store and it won't work. So, the simple answer

is, of course, write your own assignment operator and make that atomic.

But the problem is,

that if you write your own assignment operator under the current language rules that is no

longer a POD data type, and it's no longer guaranteed to be--to be compatible to C, and

remember one of our goals is to be compatible with C. So we couldnt do that either and

we can't really stop that assignment operator in C, in particularly C90. However, we've

made substantial progress in the current language with a bunch of new papers that allow us to

address this problem head-on and we can--from the C++ committee side, now prohibit the assignment

operator without violating compatibility with C. And I expect that if the C committee adopts

this work, they may well just prohibit the assignment operator as well.

So, one of the

things I just said right there is prohibit the assignment operator and the question is,

why do that instead of make the assignment atomic? And the answer is one, we didnt

want to interfere with the C view and the second thing is that people would see that

simple assignment operator and think that the reading and the writing together were

atomic. And given current hardware, we can't make that happen. We can make the read atomic,

we can make the write atomic but we can't do both together atomically. And so, we invalidate

the assignment operation so that users have to explicitly see the read and then do the

write separately.

Question? >> So if you are going to disallow the assignment

operator, why do you have +C [INDISTINCT] why not just say, no operator [INDISTINCT]

>> CROWL: Well, let me be clear on which assignment operator I'm talking about. When I talk about

assignment this--that assignment operator, I was talking about the assignment from atomic

to atomic. We still report--support an assignment from an integer to an atomic.

>> Okay. >> CROWL: Right.

And so all of these previous

operators that you saw here, they're all taking in value, so three--so you can read from a

atomic variable or you can write to an atomic variable with an integer. What you can't do

is simultaneously read from an atomic variable and write to an atomic variable in an--in

an atomic assignment operation. >> So it's like you're prohibiting--you're

prohibiting an atomic on the right-hand side of...

>> CROWL: I'm prohibiting an atomic where the--both the left-hand and the right-hand

are atomic variables. So question? >> So, are you saying that line order and

stuff that doesnt work? >> CROWL: No.

That all works. Those are--so,

basically what we're looking for is operations that have only one L value, right? If the

operation has only one L value then we can make it work. The traditional C++ assignment

operator that's automatically created for you has two L values, one on the left and

one on the right. And thats what we can't handle.

>> What if ++A--do you have to read [INDISTINCT] or A + 1 right? Did you have to be [INDISTINCT]

>> After, after that [INDISTINCT] a lot...

>> CROWL: It's one, it's one atomic variable

and we can take advantage of--so, let me repeat the question. Doesnt ++A have that problem,

when we have to read from it and write to it? And the answer is it's one L value and

we can use fetch and add hardware to make that operation work. There is--there is a

change here that's a bit subtle for the plus equals operation as you would normally define

it in C++, it would return a reference to it's left-hand side. For these atomic operations,

they do not return a reference because then you might read from it again and get bad results.

So, for these atomic operations what they return is a value, which is, surprisingly

enough, what C does.

So, let's--we kind of sort of come back a little bit. So, you had

a question? >> There was on this [INDISTINCT] planned

assignment operation. For instance a equals a+4 [INDISTINCT]

>> CROWL: Okay. The question is, is there an equivalence between A plus equals four,

and A equals A plus four? The answer is no.

The A equals A plus four, is two separate

atomic operations. As a read, a regular add-on values, and then a write. So, there's very

much a difference between those two. They're different operations.

So what--question in

the back? >> Can you do arrays? Can you do arrays?

>> CROWL: Can I do erase? I'm not sure I understand the question.

>> Array, array access? >> CROWL: Oh. So, can I do arrays? There's

no atomic operation over arrays but you can do an atomic--you can have an array of atomic

variables. Question? >> I guess I have to go back to my original

question. With all of the differences between what is sort of intuitively expected by the

C++ programmer and what this actually provides, did you ever discuss not supporting operator

overloading [INDISTINCT] >> CROWL: So, the question is with the differences

between sort of traditional C++ symantics here, did we ever discuss not having any operators?

The answer was yes, but nobody wanted really to do that.

Particularly among the committee

whenever we wrote code, we almost never wrote out big function calls, we did plus equals.

And so for--in a lot of cases we just thought that it would be too much trouble.

>> Okay. >> CROWL: Your point is valid though. And

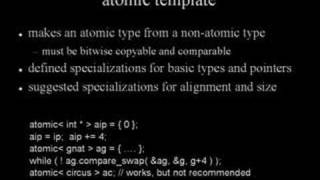

so in addition to the C++ view of these basic types. We also have a atomic template which

gives you a uniform way to name the types.

So, the other types were atomic_int. If you

want an atomic_char, there was--it was atomic_char. And those are two different names and really

hard to manage from within templates without writing a bunch of specializations and so

forth. So, what we have now is an atomic template.

You can write atomic left angle and right

angle and you get all the same semantics. And now you can put atomics inside of templates

and make those things go forward. There are however some semantic restrictions on what

you can put inside of this atomic template. What types you can put in there.

The primary

ones being, it must be bitwise copyable. Because we have to rely on those semantics with the

underlying hardware. And they must be bitwise comparable. The compare and swap operation

is a bitwise comparable operation.

And that template has specializations into the basic

types and we also suggest how to implement this so that there are specializations onto

the hardware for arbitrary types. So one of the things in C++ standard library is this

notion of a pair. So if I have a pair of two ints, I'd like to be able to put that into

an atomic template and have everything work, and with this formulization, it will work.

So, any small type like a gnat, you can wrap with atomic and things should work out pretty

well. If however, you wrap the entire circus with an atomic, we cannot implement that in

hardware.

So what we will doing--be doing is putting in some locks around it and stopping

the whole world while all of these things go on. And so while at work we recommend you

don't do that, so use atomic variables with care. The other thing about atomics is there

are certain properties of freedom that keep getting labeled with these atomics. So I want

to go through those because they do affect the interpretation of C++.

The first and foremost

principle is whether or not an atomic operation is lock-free. And this is important because

if it has a lock and you crash it in the middle of the--crash the program in the middle of

the operation or crash the thread in the middle of the operation. You now, have somebody holding

on into a lock and you could get a chain of failures because that lock isn't released.

Lock-free means that there is always somebody making progress even in the presence of crashes,

okay. The next stronger capacity us wait-free and what that means is that every operation

will complete in a bounded amount of time.

So you can get lock-free but it may be arbitrarily

long to make some of those operations finish. They will finish but it may take arbitrary

amounts of time. Wait-free means, they won't take arbitrary amounts of time. And address-free,

means that the atomicity of the--of the operation, does not depend upon the address, okay.

This

is particularly important when you have two processes sharing memory at two different

addresses. So you have physical memory at two different virtual address spaces. And

so, this is--this is where that property comes in.

There are no support in hardware for lock-free

atomics on big types.

So those big types must necessarily be implemented with locks and

those locks cause problems when you get to signals. So you don't want your atomic variables

to be--have locks in them if you are also using those variables with a signal, because

they'll come in at arbitrary times. So we give a means to be able to test whether a

certain atomic variable is implemented in a lock-free manner or not. So--well, we can't

guarantee that the atomic variable you want to use to communicate with your signal is

lock-free up front in the language.

We can guarantee that it will give you that ability

to test it. And for--and because certain processors implement lock-free stuff through dynamic

libraries that are independent upon the individual processor, we can't even know a compile time

whether or not certain types are going to be lock-free. So, it's a dynamic test for

that. We also have pre-processor based static tests that will tell you whether or not a--the

basic types are always lock-free or never lock-free and if they're--if they're not always

lock-free and not--never lock-free you have to fall back to the dynamic test.

The second

layer of freedom that I talked about was wait-free and again you need hardware support to make

these things work. But that support is substantially less common and when people write this kind

of code they end up writing fairly processor-specific code and it's difficult to write portable

programs in this realm. We also found that few of the people that were wanting to write

with these atomic variables cared--some did but most didnt. So, we're going to leave

this property unspecified in the standard.

We won't say whether or not it's--it's--it's

wait-free. And the third freedom there was address-free atomics. Inherent in this is

that there are two different addresses for the same variable, and thats outside of

the C++ standard. C++ standard has no way to say that.

But we recognize that people

in fact do write C++ code that shares memory between processes and does so at different

addresses. So we're going to try and help out here. What we're going to say is, if an

operation is lock-free, it must also be address-free. Thats the intent that we will put into the

standard, we can't make it a firm requirement in the standard because we have no way within

the standard to test that.

But thats what we're going to say. We dont believe that,

that will be a problem for any of the C++ compiler and system vendors. But that should

then give people riding multi-process programs a leg up. And it should also help people that

are mapping the same file into two different places in the virtual address space a leg

up.

Yes? >> Have you folks, thought about maybe incorporating

things like transactional memory? >> CROWL: The question was, have we thought

about incorporating transactional memory? The answer is yes, the committee has thought

about that to the extent that we're pretty much agreed that while it's an interesting

area of research, it's still an area of research not yet stable enough for us to put into a

C++ language standard. >> [INDISTINCT]

>> CROWL: Yeah, yeah. So I want to--I want to point that out, some people are starting

to get nervous that I'm not wrapping up. This is a 90-minute talk, so if it--if it--if it

appears I'm not wrapping up it's there--it's that--thats there for a reason.

>> You actually have a little bit more than I realized which is for inter-transactional

memory.

You actually do a lot of atomics for entire [INDISTINCT] and you could only imagine

that being replaced by transaction mechanism [INDISTINCT].

>> CROWL: Right. But the atomicity we have over entire struct is basically to load and

store it. And you can do a compare and swap--very limited operations.

>> Just--just, you know, just [INDISTINCT]. >> CROWL: Yes.

>> We have that.

>> CROWL: So the next thing is that we have

these variables and in this multi-threaded world, what happens to the variables. We've

got three major areas, the first is thread-local storage--question earlier, we are introducing

thread-local storage. We also have to address the dynamic initialization of static-duration

variables i.E. Global variables and function local statics.

And we also have to address

the destruction of those variables. The first thing we're going to do probably the easiest

thing is adopt thread-local storage. There's lots of existing practice for this already

for POD structure, simple C types. And we do this the same way those compilers did,

we introduce a new storage duration and a new storage class key word.

Each variable

is unique to its thread it--but each variable is--is accessible from all of the other threads.

So if you take the address of your thread-local storage and you pass that to some other thread,

it can read and write to your variable. And the consequence of this is addresses of thread-local

storage are not constant, those are created for each thread. We are also extending this

to allow dynamic initialization and the destructors for variables in thread-local storage. And

we've defined this very carefully to permit dynamic or lazy initialization of these variables

because you--what you dont want to have to happen is when you start up a thread--it

to have to instantly run around initializing thousands of thread-local variables, that

it may never reference in the entire lifetime of the thread.

So, we're very much trying

to be able to set it up so that you only have to initialize the variables at the intersection

of the set of variables and the set of threads. So, we want a sparse use of memory and initialization.

>> Do you initialize one when you take the address of one?

>> CROWL: The question was, do you initialize it when you take the address of it? Well,

the--what the standard says is that it will be initialized before first use of that variable.

And so, it gives the compiler a little freedom to push things a little further ahead. So,

what's permitted for instance is if you use a thread-local variable in the middle of a

loop, it might move that initialization out of the loop and do it at the top of the function.

So, there's a little leeway in where compiler vendors can start that initialization. We

can sort of do this all in the compiler but OS support would provide us a little more

efficiency.

If I have a global variable that requires dynamic initialization, say it has

a constructor. This gets tricky because what if I have two threads running and they both

want to see these things initialized, or they both try to initialize that. Without synchronization

we potentially have data races. With synchronization we potentially have deadlock.

And so, we're

going to break this part into two problems. One is for function-local static variables

and one is for the names-base type variables. And the reason is function-locals already

have this notion of lazy initialization, and we're going to capitalize on that. The first

thing we're changing is--at the current standard of course doesnt say anything about synchronizing

these initializations.

We have decided to standardize on making sure that those things

are synchronized. So, two threads who decided to initialize the same thread-local variable--thread-local

static will be synchronized. But while the initializer is running, they will not be holding

a lock, okay. You may still--other threads maybe blocked waiting for that to happen but

there will be no new lock introduced and held for that duration.

And this is made possible

for--by a new algorithm developed by Mike Burrows here at Google. And Google has released

this code into the wild under a--under a friendly license. And basically, what this algorithm

buys us is we get the--on average a of cost one additional non-atomic memory load to make

all of this work. It's really a quite subtle algorithm.

For non-local static duration variables,

that is, say global variables. We've got it a little bit tricky here. The current language

doesnt let you--gives you sort of an indeterministic access to variables that are defined in another

translation unit. So, the only thing that's sure is what you access within your own translation

unit.

What we have done is change the current definition where it's either zero initialized

or fully initialized to, you dont know what you get. So, what was, sort of, indeterminate

is now undefined. So, all you can really rely on while you're initializing the global variables

in your translation unit, is other variables in your translation unit. What that allows

us to do is concurrently initialize the variables in two different translation units.

Because

as it turns out concurrent initialization in some application will help substantially.

>> How do you know, [INDISTINCT] like this, how do you know the vector itself doesnt

rely on some other variables from another translation unit?

>> CROWL: So, the question is, whether or not, vector relies on variables for some other

translation unit. And the answer is the implementer of vector has to make sure they dont that.

So, it... >> So, it's supposed to [INDISTINCT].

>> CROWL: Yes. So, it will have to be part of the contract between you and your library

vendor about what they do in terms of their implementation.

Destruction has much the same

property. So, we also say that when you destruct, you can only rely on those same set of variables.

The extra complexity here is what happens to function-local variables that were initialized

while initializing a global variable. And the answer to that is interleave them properly

and save it up for destruction. >> Are the destructors run on the same thread

as the constructors? >> CROWL: The question is, are constructors

run in the same threads as--are the destructors run in the same thread as the constructors?

And the answer is, you dont know, okay.

And I'll get to that reason in a second. When

I get to termination. Yes? >> [INDISTINCT] I dont--I dont understand

how you can do this apparent version [INDISTINCT] the deadlock. Because if anyone is taking

these threads of any variable that [INDISTINCT] to initialize then they could depend on that

thing that held somewhere [INDISTINCT] >> CROWL: Right.

And so, this model implies

that you arent going to the storing address sees in global objects that cross those module

boundaries. So, we had a lot of discussion over this. And this is sort of the working

compromise between basically forcing sequential initialization of all of the variables. Which

means that when your application starts up, you have this long period of being able to

only exploit one thread, versus basically being eliminating global variables because

of the synchronization problems.

So it's not an ideal compromise. I'd be really happy to

sit down with people and if there's a better idea, or you think that this isnt going

to work, please sit down with me. We'll go through it and try and make sure what happens,

because we certainly dont want to release a standard that can't work. But we understand

that what happened--this model here will invalidate some current codes.

We dont think it will

be too hard because what we've made undefined was previously indeterminate.

>> Indeterminate also is the common cause of the mysterious [INDISTINCT]

>> CROWL: And also a common cause of mysterious [INDISTINCT] so, now we're going to move into

the sort of a library phase and this should go quicker. The model that we have for threads

is a fork and a join. And you fork a function to be executed and then later you come through

and join. And because of the magic if C+++ operator paran, paran, we can make--make the

join look sort of like a function call.

This notion is very standard about what's--from

what's being supported in the current operating system world. That view is common. We're going

to stick to it. We're also going to support functor like objects so that you arent totally

stuck trying to communicate through a narrow POSIX only passing in your function pointer

type of interface.

>> Why are those extra parentheses there?

>> CROWL: Talk to me. So, the question is, "Why are those extra parentheses there?" Talk

to me afterwards and I'll explain to you bizarreness in C++ syntax. Those extra parentheses have

nothing to do with threads. >> [INDISTINCT]

>> CROWL: The next thing is the scheduling of these threads.

As it turns out scheduling

is a very touchy issue and things are dicey. So, we're probably in the standard going to

only supply two mechanisms for scheduling threads. One is a yield which says, "Now would

be a good time to switch to somebody else." And the other is a sleep which is, "I want

to go away for awhile." Those are adequate for a lot of applications. They won't be adequate

for all applications.

For those other applications we're going to give you a handle on the operating

system thread. So, you can ask us for your platform specific handle to a thread. And

then you can go off and do communicate with your operating system detailed scheduling

issues. We will also have a query to allow you to find out what the hardware concurrency

is on your system.

So this sort of gives you a measure of maybe what's the most number

threads you should fork off to be--to be helpful. It's a very vague number because hardware

is actually quite different. For synchronization, the base concept we have is a mutexe, which

similar to what POSIX and Windows provide. We're going to have a notion, a sort of exclusive

you know, writer lock and then we're going to have a reader/writer layer on that.

And

there are four--sort of four notions of locks in here and mostly those are still the same

exclusive, you know--single-thread lock versus reader/writer lock. The difference between

these layers is sort of your right to change from being reader to being a writer without

having to give up the lock. So that's what these convertible upgradable types of things

are. >> I understand the word upgradable, what

is convertible? >> CROWL: So that is you can convert from

being having only one person--only one reader can have convertible access.

And when he wants

to become a writer, he converts. >> What's upgradeable?

>> CROWL: So I have to sit down and look the API's every time I try to answer this question,

and I don't have those API's there. So it's subtle in the transition states and, and I

can't remember exactly which one happens here. But it's all centered around being able to

convert from read-access--shared--for you hold the read lock and being able to get,

get to the write lock without releasing it.

>> [INDISTINCT]

>> CROWL: Yeah. That sounds similar. So the observation was convertible a single reader

to multiple reader and upgradable is, I am the golden reader who can convert to writer.

Now, so thats a mutexe. On top of a mutexe, we have a lock.

And a lock is basically the

state of owning a mutexe--of controlling a mutexe. So a lock is typically done as a function-local

object. It's a local variable in your function, that provides both the acquire of the mutexe

and the release of the mutexe. And these are coordinated with the mutexes.

This is fairly

common in some of the C++ libraries to make sure that you get mutexe acquires and releases

paired up properly. You are not required to use these things. We also have condition variables.

The early versions of Windows did not provide condition variables. Later versions of windows

do, everybody whose programmed on Windows and Unix, pretty much agrees that the Windows

Advance are much harder to use than POSIX.

Condition variables, and so we're standardizing

on that approach. What condition variables allow you to do is upon grabbing a mutexe,

you've discovered the state isnt quite right for you to release the mutexe and have somebody

notify you when the state is something that is appropriate to you. And once you have these,

then you can write your code in the monitor pattern which has a fair amount of literature

behind it. And so here is an example of the conditions and where they get used in particular

they represent extreme states.

So for a buffer, we have conditions were the buffer is full

or not full and not empty. So we represent the extremes and then we can notify threads

when we reach extreme states. The next thing... >> The locks are non-reentrant?

>> The? >> The locks are non-reentrant?

>> The question is, "Are the locks are non-reentrant?" The answer is, that's unclear right now.

The

committee hasn't fully decided whether or not to provide non-reentrant locks only, reentrant

locks only or two locks with the option, so we haven't quite settled down that yet. So

the next topic we're going to talk about is thread termination. The first issue is voluntary

termination, so if you run off the end of the function that was used to start the thread,

you've terminated that thread. But there's a lot of applications where the natural thing

to do with a thread is to put it in a loop and waiting on some external event and you

want to shut it down some way.

So the application that doesn't terminate of it's own accord,

it wants to be told to terminate. And for that, we need some way to signal to the entire

application and all of its threads that it's time to quit. And the committee has a strong

opposition to asynchronous termination. Because asynchronous stuff going on in C++ is just

a nearly impossible to code properly.

So the committee has a strongly voted against any

form of asynchronous termination. So now, we're left back to synchronous termination.

And the only way to really make this happen is if in one thread I signal to another thread

that it's time to terminate and that second thread perceives that as an exception. So

let's take a little regression here and ask, "Well, what happens if a thread has an exception

that it doesn't handle? The exception runs off the top." Well, do you call std terminate

as you would if an exception popped out of main. Do you propagate the exception to the--to

some thread trying to do a join? Do you just ignore the exception? And the answer to that

is we haven't fully decided yet.

There's various issues either way you do it. Any way you do

it, you're going to make somebody unhappy. But we have sort of decided that what we need

very definitely is a way to manually propagate. So if we assume in the simplest case we just

call terminate, then we're going to need a way to manually propagate an exception from

one thread to the other.

And that in fact requires new language facilities because right

now, we have no way to sort of store in forward an exception. So what happens--we're back

to cancellation. We've already sort of mentioned that cancellation will perceived as an exception

in the second thread thats being told to cancel. The question is, "Will that thread

be ready for it? When we'll see that exception?" In the case where you're not ready for it

we have an API to say, "I'm not ready for any cancellations now." And so, any cancellations

will just sort to be noted and will not actually appear as an exception.

And then, when you

become ready to deal with the cancellation, you can then pick up that exception. Within

that range of things where you're not quite ready for one, you can explicitly test for

whether or not a cancellation is pending. And you can simply ignore things. But in the

normal case, you will get a exception.

But you won't get an exception at our arbitrary

points in the program. You will get an exception only at cancellation points. Places where

we know it's safe to cancel you. And those are based on this list here, just as synchronization

points where you're likely to be blocking or doing some other act like that.

So, what

comes out of all of these things is potentially instead of what you were expecting, an exception

saying, "You've been cancelled." There is however a problem in all of this. None of

those points included I/O operations. So, if you are blocking on I/O--a thread is blocking

I/O, you now have no way to cancel it and if the I/O it's waiting on, it doesnt show

up, it's going to be hanging there forever. So, we know this was a problem, partly its

a weaknesses in the current operating systems, because many of the current operating systems

dont guarantee that you will wake up from an I/O operation if you cancel a thread.

So,

the C++ Committee is trying to work it out in conjunction with some other committees

like POSIX, what we can do to make sure that we have some way to cancel threads that are

blocked on I/O. And we dont have a resolution on this yet. And then, going beyond what we're

putting into the standard. We want to try and enable libraries to be--to handle the

heavy lifting.

So most of what I've shown you here today is primarily the lowest layer

that most programmers will never see that they will work through libraries at a higher

layer. And the question is how do you get that higher layer? The first task is probably

things like thread pools and thread groups that the C++ Standards Committee will define

in terms of the technical report. There's still an open debate about how much of that

will go into the next standard, and how much will be deferred to the actual technical report?

But the issues for the deferring these things add up among them being we're not quite ready.

We dont know how much we want to a force early, we still need specification work, etc.

And the standard is looming very quickly. What would be count as success? Well, one

of the things is can we build libraries? And the answer to that is we believe that as of

today that we can build all of the facilities that we're trying to implement in the second

technical report using the facilities that have been proposed for the next C++ standard.

So we have sort of evidence that at least the first round of libraries can be implemented

with what's being defined in the standard.

And we believe then, that you can you pick

up and define your own set of higher level libraries beyond that. One of those examples

is futures, that we're kind of putting in the technical report, and basically what the

future says is, "go off and compute this function and later I will come back and ask for the

return value." The fourth join model that the standard is proposing doesn't provide

for return values, its all void. So, what happens if you want those return values? Futures

is a means to have that. What happens if the thread acts with an exception? You want to

be able to get that exception at the time you ask for the return value.

And the advantage

here is that now you have a simple mechanism to take sequential code and using the futures,

turn it into parallel code. And for those interested in reading the actual papers those

of the paper numbers. But we have a big hole right now in the standard. And that is the

proposed standard does not have a mechanism for lambda.

For the purposes of parallel and

compare programming, what we really need is basically an anonymous nested function. Something

like Auto Pascal. There's a group, we're late but we're trying to get this done in time

for the standard. So, if we can put that in, then, those lambdas provide you an extra layer

of functionality and capacity that really helps you write good libraries, so that you

can write a parallel four loop entirely in library code.

So, the conclusions are--the

basics are on track. Most of the core language stuff we could probably go with what we have

today. We are still fine tuning it, maybe trying to loosen the model in certain places,

so that we can support more loosely coupled hardware. And the basic model for launching

and dispatching threads and synchronizing all of that is there.

We are still working

on detailed syntax and detailed semantics, but we're basically on track there. Some of

the features need work like this destruction we talked about to make sure that we're right

there, making sure the--the exception propagation is solid and getting this cancellation lambda--the

higher level issues in. That's where we--where--where we've got the biggest work ahead of us. And

then of course the real value of all of this is not when we're done with the standard,

but when you pick up the standard and write the libraries, write the facilities, build

on top of what's in the standard and, you know, put it all on Open Source and so forth.

Question? >> You never talked about--dynamically allocated

atomic variables and locks [INDISTINCT] and what find to do this things coming to existence

and how do you resolve races between creation and destruction of atomic variables and their

operations? >> CROWL: Okay.

So the question is when these

atomic variable--dynamic allocation of atomic variables and locks do. And when do those

get handled? First, the atomic variables have what we are called trivial constructors. So

they come into existence basically either completely undefined because you failed to

provide an initial value, or they have a defined initial value. So there is no possible race

on creating them.

Once you have their name and can reference them, they're already initialized.

>> You can get race [INDISTINCT] let's say I use an arena allocator. I just deallocated

a piece of an object which has non-atomic variables which have [INDISTINCT] in stores

of... >> CROWL: Okay, so...

>> ...Then I created new objects with an atomic variable in there--there bring us operations

or not having [INDISTINCT] that they... >> CROWL: And so the questions is what happens

if you arena allocator and you are basically were using the same memory, right? The atomic

variable--the--the operations--the atomicity comes not with the initialization but with

the first atomic operation on it.

So anything you do before that first atomic operation

is prior history--sequential history. So that atomic operation, the reason that first atomic

operation is good is that the initialization is well defined and the initialization is

done before the atomic variable comes into existence. So the normal semantics is that

variables are dynamically initialized are zero initialized, before that variable's name

is released. And because they are zero initialized, we have a good state at the start of the atomic

variable.

Okay. Now for mutexes the story is different. Because most of the mutexes

from the operating system require that you had--do some initialization operation on the

mutex. And for that it has to be done by one thread and you have to make sure that you

don't let that--the name of that mutex out until after it is initialized.

So you do not

want to pass the address on until after you have initialized it. Now for destruction,

the atomic variables also have trivial destructors. So basically they are never destructed. They

are always valid.

They will be valid until the end of the address space. The mutexes

from the operating system, they also often have that same trouble of, you have to call

a specific function that says, "Okay, this mutex is now over with" and you have the same

problem there that you had initializations. Make sure of that you've taken away the address

from that mutex from all the other threads before you bring it down.

>> Can you--can you legally create objects like [INDISTINCT] objects which have mutexes

which delete themselves where the reference count was two to zero.

>> CROWL: The--the question is can you have reference-counted objects that delete themselves.

The answer is yes, you can. But you have to be careful to make sure that you--the reference

count in such that you do not let anybody else in.

So, so you have to set up, set things

up in the right order and--reference counted objects in an atomic world are not trivial

so... >> It's the mutex thats the problem.

>> Yeah, mutex can be handled because you can, you can undo the mutex while--while still

preventing access with an atomic variable. So you can use an atomic variable to stop

people from trying to do a double operation on the mutex. Question?

>> Is there effort to implement like we do on other sequel [INDISTINCT] on say, gcc?

>> Okay, so, so...

>> We would have--there is some support on

gcc already for some in this case. >> Yeah, the, the question--I'm going to expand

your question a little bit and the question is, is there a prototyping information of

efforts underway for any of this kind of stuff. The answer is that there--for the standard

in general there is a fair amount of prototyping effort going on within gcc to prove certain

higher level features are in fact implementable for the next standard. There hasn't been there

any prototype work for a lot of a lower level stuff here directly for the standard.

We--the

atomic stuff has been justified in part because a lot of the prior work out there is a very,

very similar in what we are standardizing. So, for instance the atomic operations there's

two lines of reasoning that say it's implementable. One is the paper that proposes it has the

bare minimum implementation in it. And then it points at the gcc and Intel underscore

in the sync primitives that say "The rest of it has already been implemented by gcc

and Intel compilers for this alternate syntax." Now, the one place were we have a real weakness

here is the implementation of the concurrent variable initializations and destruction.

There is, there is no implementation of that yet.

>> [INDISTINCT] >> Yes.

The question is maybe we can get that

going. And I would like to get that going and every time I sit down to start on it I,

I get interrupted and--so if somebody out there has some spare cycles and want to help

work on this, happy to hear from you. >> What does n2169 mean?

>> Oh, okay. So the question is what n2169 mean.

So the C++ standard works in terms of

working papers, people write a paper describing a need and a proposal and so forth. And then

that gets a document number and--you know we start at n1 and we're now at n2169 and

so that's the paper that is on some certain topic. This n2169 is a paper that's describing

all of the features that are being tracked for the next C++ standard. So all of the proposals

for the next standard, it's tracking all of them and giving you sort of an overview where

everything is right now.

And a subset of that is all the threads--all of the papers dealing

with multithreading. And unfortunately there is no overview paper for threads--you get

an overview for the entire standard or individual thread topics but not for the whole thread

domain. Any more questions?--Oh, thank you all for coming. [AUDIENCE APPLAUSE].

No comments:

Post a Comment